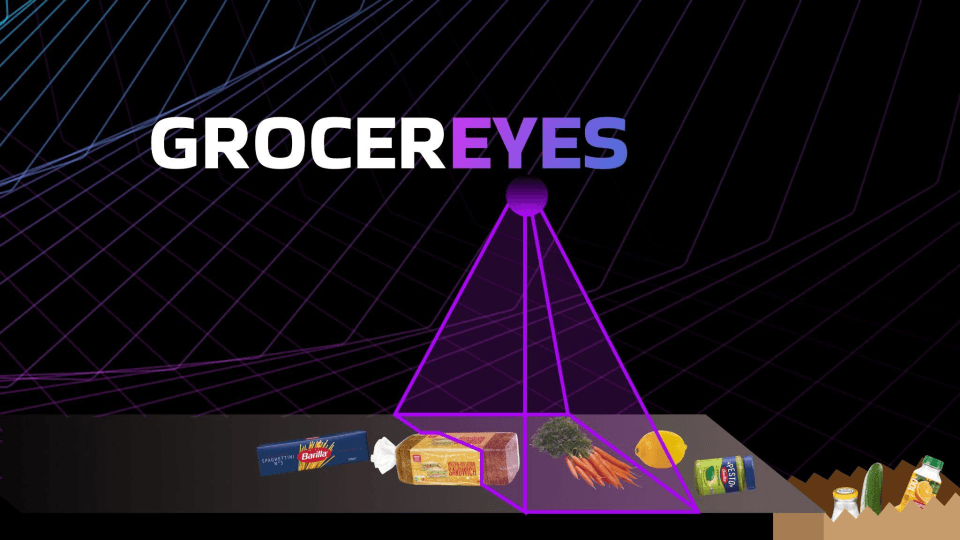

GrocerEyes

Introduction

GrocerEyes was the project my team and I created during my time at the LeWagon Data Science Bootcamp in Madrid. The last two weeks of the bootcamp were dedicated to working on a project of our choice, with everyone having the opportunity to pitch their ideas. At the end of the pitch session, a voting process determined which projects would be brought to life.

Having found many previous and recommended data science projects somewhat uninspiring due to their exclusive focus on data analysis, I decided to pitch a project that promised to be more enjoyable and ambitious to develop.

For this I used the following pitch:

"We will automate the checkout process of a supermarket."

In a nutshell, my idea received the most votes, and three fellow students joined me on this exciting journey. Under my guidance, we set out to bring this concept to life.

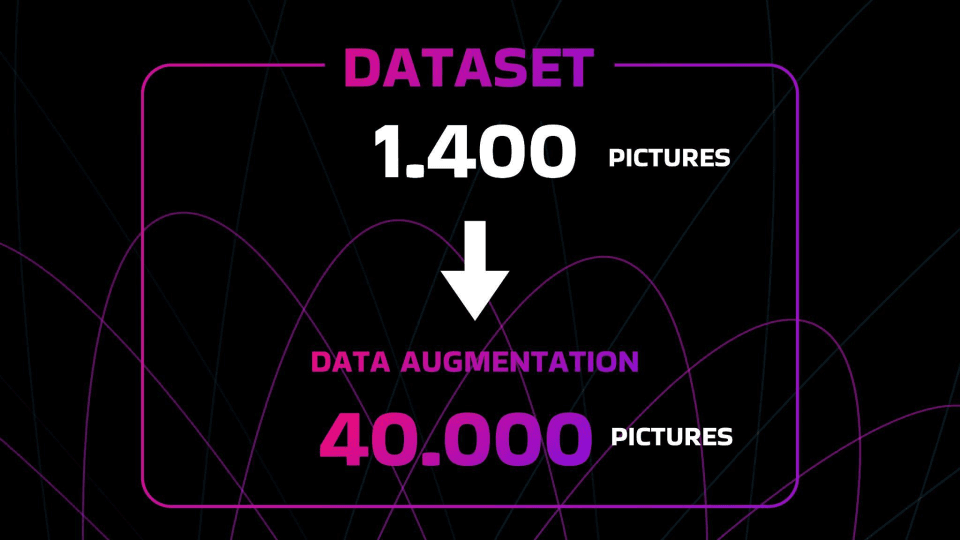

Getting Started

While some other groups were fortunate enough to find readily available datasets online, our situation was a bit more challenging. Given the localized nature of packaging, it was impossible to find a dataset that suited our needs for Spanish groceries. This led us to an interesting decision: creating our dataset from scratch.

We headed to the local supermarket and purchased a variety of groceries. Armed with a camera, we captured images of these items from different angles, amassing a total of 1,400 pictures. To enhance the robustness of our dataset, we implemented data augmentation techniques, which involved applying various transformations to the original images. For instance, we rotated images by 90 degrees, flipped them horizontally, and adjusted brightness. This effort resulted in a whopping 40,000 images in our dataset.
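To make the augmentation step more concrete, here is a minimal sketch of the three transformations mentioned above. It is not our original code: it assumes a grayscale image stored as a plain list of pixel rows so that it runs without any libraries, and the function names and brightness factors are made up for illustration. A real pipeline would more likely use an image library, and for object detection the bounding-box labels would have to be transformed along with the pixels.

```python
from typing import List

# Assumption: a grayscale image is a list of rows of 0-255 pixel values.
Image = List[List[int]]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale every pixel by `factor`, clamping the result to 0-255."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]


def augment(img: Image) -> List[Image]:
    """Return the original image plus a few transformed variants."""
    return [
        img,
        rotate_90(img),
        flip_horizontal(img),
        adjust_brightness(img, 1.3),  # brighter (illustrative factor)
        adjust_brightness(img, 0.7),  # darker (illustrative factor)
    ]
```

Combining and chaining transformations like these is how a few hundred photos per product can grow into tens of thousands of training images.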

Next up was the training of our custom model. Following some research, we opted to utilize a pre-trained model and focused our training efforts on the final layers. We selected YOLOv5 as our model and conducted the training on Google Colab.

The training process itself spanned approximately 12 hours. Impressively, our model exhibited a remarkable accuracy rate, hovering at around 90% when tested on our evaluation set.

Next Steps

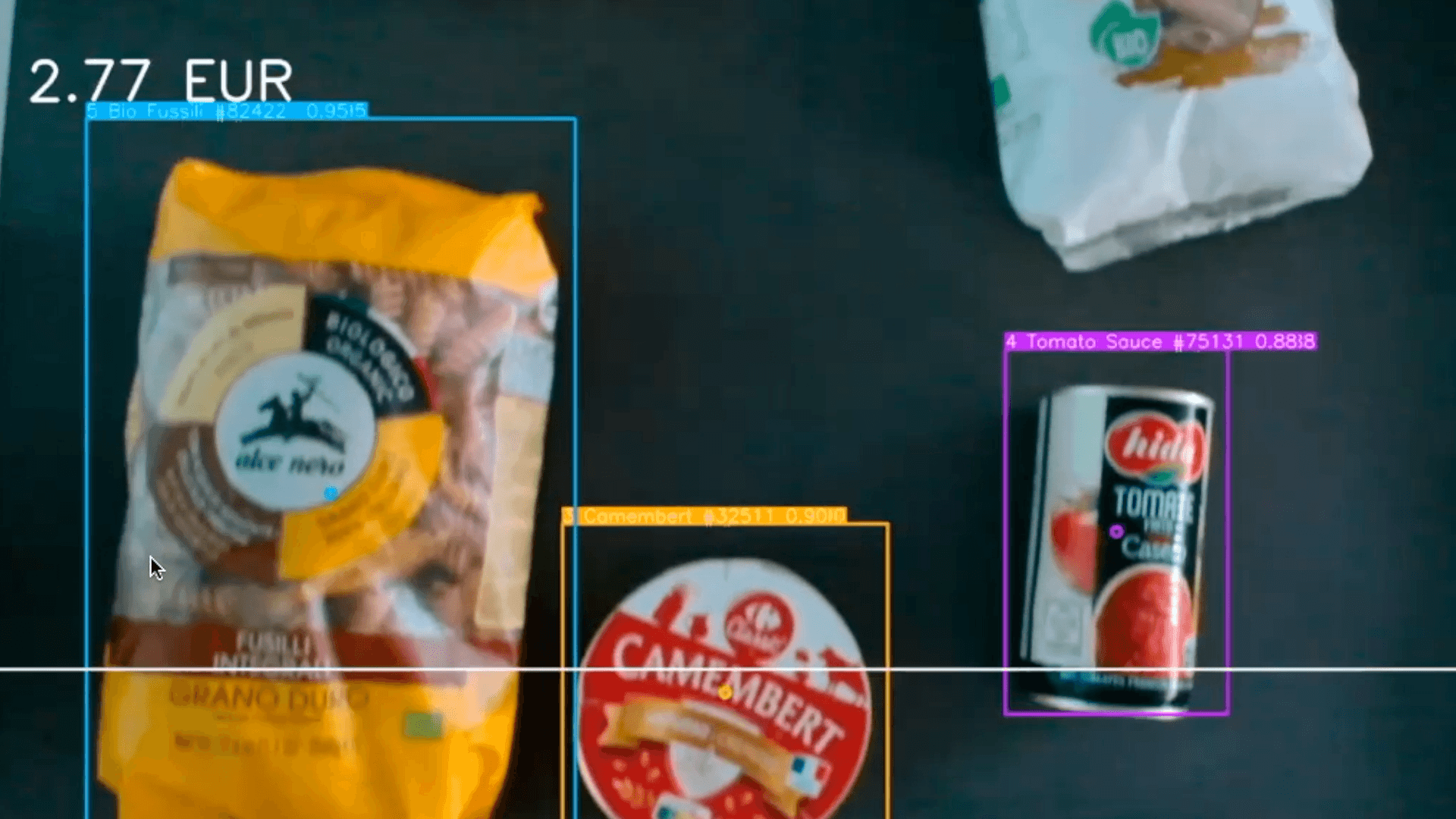

Once our model had completed its training, the next challenge was to develop the logic for real-time evaluation of items and their prices. Our ultimate goal was to enable a live camera assessment of the items currently on the conveyor belt. Initially, I had conceived an algorithm that would utilize the x and y coordinates of the detected objects to identify items and calculate their prices. After extensive discussions with the team, however, we collectively decided to leverage a pre-trained algorithm for this task instead.
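For illustration, a coordinate-based approach could look roughly like the sketch below. This is not the code we ended up using; it only shows the idea of summing the prices of every detected item whose bounding-box centre lies inside the belt area of a single frame. The `Detection` class, the belt rectangle, the confidence threshold, and the price list are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

# Hypothetical price list in euro cents (labels and prices are made up).
PRICES_CENTS: Dict[str, int] = {"milk": 95, "pasta": 120, "olive_oil": 450}

# Assumed belt region in pixel coordinates: (x_min, y_min, x_max, y_max).
Belt = Tuple[float, float, float, float]


@dataclass(frozen=True)
class Detection:
    """One detected object in a frame (assumed structure)."""
    label: str
    confidence: float
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def items_on_belt(
    detections: Iterable[Detection], belt: Belt, min_confidence: float = 0.5
) -> List[str]:
    """Return labels of confident detections whose centre is on the belt."""
    bx0, by0, bx1, by1 = belt
    labels = []
    for det in detections:
        if det.confidence < min_confidence:
            continue  # skip uncertain detections
        cx = (det.x_min + det.x_max) / 2
        cy = (det.y_min + det.y_max) / 2
        if bx0 <= cx <= bx1 and by0 <= cy <= by1:
            labels.append(det.label)
    return labels


def total_cents(labels: Iterable[str], prices: Dict[str, int]) -> int:
    """Sum the prices of the given items, in cents."""
    return sum(prices[label] for label in labels)
```

Working in integer cents rather than floating-point euros avoids rounding surprises when many items are added up.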

To bring our project to the next level, we divided our responsibilities. While the other three team members delved into the complexities of implementing the live evaluation algorithm, I saw an opportunity to take this project even further. It's worth noting that most of the bootcamp attendees had minimal to no prior coding experience. In contrast, I had a solid foundation in Python and JavaScript, largely due to my previous project for Hive (you can check it out here).

Taking the project to another level

While we could have concluded our project with the object detection phase, I was inspired to take things a step further. I set out to write code that would accomplish the following:

- Generate a traditional paper receipt

- Send an email containing a digital receipt

- Include nutritional information along with a recipe recommendation based on the purchased items

To achieve this, collaboration and alignment with the rest of the team on the data structure were crucial. Additionally, I received assistance from another team member who took charge of identifying and implementing a suitable API for gathering recipe information.
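As a rough idea of what the receipt step involves, the sketch below turns a list of detected item labels into a plain-text receipt and wraps it in an email message. It is a simplified illustration rather than our actual implementation: the function names, the price dictionary format, and the receipt layout are assumptions, and actually sending the message (for example with `smtplib`) is left out.

```python
from collections import Counter
from email.message import EmailMessage
from typing import Dict, List


def build_receipt(labels: List[str], prices_cents: Dict[str, int]) -> str:
    """Build a plain-text receipt from item labels and prices in cents."""
    counts = Counter(labels)
    lines = ["GrocerEyes receipt", "-" * 30]
    total = 0
    for label in sorted(counts):
        qty = counts[label]
        subtotal = qty * prices_cents[label]
        total += subtotal
        lines.append(f"{qty} x {label:<18}{subtotal / 100:>8.2f}")
    lines.append("-" * 30)
    lines.append(f"{'TOTAL':<22}{total / 100:>8.2f}")
    return "\n".join(lines)


def build_email(receipt: str, sender: str, recipient: str) -> EmailMessage:
    """Wrap the receipt text in an email message (not sent here)."""
    msg = EmailMessage()
    msg["Subject"] = "Your GrocerEyes receipt"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(receipt)
    return msg
```

Agreeing on one simple structure, here a list of labels plus a price lookup, is what lets the detection code and the receipt code be developed independently.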

Needless to say, the project turned out to be a huge success, and we had the privilege of presenting it to our fellow classmates. Furthermore, our project earned recognition and was featured on the LeWagon website.

Demo

You can find our full demo of the project here.

Team

Huge thanks to my team for making this project possible.